Reports by Guest Contributors

The rapid advancement of artificial intelligence (AI) has propelled it into the heart of the decision-making processes, operations, and strategies of large organizations and businesses. This evolution has also raised the crucial question of how to implement effective AI governance principles. In this dynamic landscape, the intersection of technical solutions with societal debates, cultural shifts, and behavioral changes plays a pivotal role.

Effective AI governance in large organizations and businesses encompasses a multifaceted approach. On one hand, there are technical solutions that involve the development and implementation of algorithms, tools, and platforms that uphold ethical principles such as fairness, transparency, and accountability. These solutions include explainability tools and robustness checks, aimed at ensuring AI systems' responsible behavior.

On the other hand, societal debates, cultural shifts, and behavioral changes are equally vital. These involve engaging in public discourse to shape the policies and regulations that govern AI use. They also involve fostering a cultural transformation within organizations to align their practices with ethical AI principles.

The AI Governance Alliance*1 is a pioneering stakeholder initiative that unites industry leaders, governments, academic institutions, and civil society organizations to champion responsible development, application, and governance of transparent and inclusive AI systems globally. This initiative was born out of the recognition that while numerous efforts exist in the field of AI governance, there is a need for a comprehensive approach that spans the entire lifecycle of AI systems.

The strategic goals of the AI Governance Alliance (to be referred to as “Alliance” in this article) include:

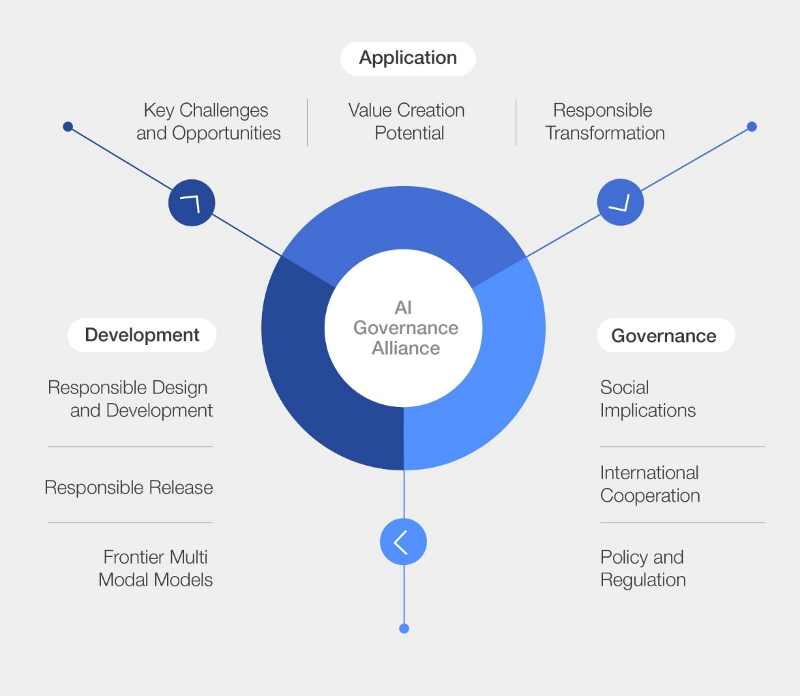

The Alliance structures its efforts around three main workstreams, each targeting different stakeholders and aspects of AI governance. These workstreams are designed to tackle the multifaceted challenges and opportunities presented by AI, particularly focusing on generative AI technologies.

Together, these workstreams encompass a comprehensive approach to AI governance, seeking to ensure that AI technologies are developed and deployed in ways that are responsible, ethical, and beneficial for society. The Alliance's focus on bringing together diverse stakeholders—from corporate leaders and public officials to academics and society leaders—highlights the importance of collaboration across sectors in addressing the challenges and harnessing the opportunities presented by AI.

Source: AI Governance Alliance

Figure 1: Workstreams of AI Governance Alliance

When it comes to responsible AI, the responsibility is shared among developers, users, and regulators. Developers should prioritize fairness, transparency, and safety in AI design, while users are encouraged to deploy AI technologies responsibly and understand their implications. Regulators, in turn, are tasked with creating legal frameworks that aim to ensure safe and ethical AI deployment without stifling innovation.

Balancing responsibility with economic incentives requires a nuanced approach. Responsible AI can lead to sustainable long-term economic benefits by fostering trust and preventing reputational damage caused by unethical AI practices.

Ongoing debates on AI regulation play a pivotal role in establishing effective global frameworks. As AI is a general-purpose technology that moves across borders, international collaboration is key, and several aspects need to be considered to prevent a disjointed approach:

Firstly, common standards are essential, as they facilitate interoperability and ensure consistency in AI governance practices across borders.

Secondly, transparency and accountability are critical; organizations should be encouraged to be transparent about their AI applications, and mechanisms should be in place to hold them accountable for the outcomes.

Lastly, regulations must protect fundamental rights, including privacy and non-discrimination, to ensure ethical AI deployment.

In various regions, there is a notable approach to AI regulation characterized by supportive infrastructure and adaptive regulation, fostering innovation. This approach involves investments in AI research hubs, academic partnerships, and public-private collaborations to promote technological advancement. It prioritizes maintaining flexible regulations that can adapt to advancements in AI, ensuring ethical standards are upheld without hindering development.

AI holds tremendous promise in addressing global challenges, with significant potential in fields such as healthcare (e.g., medical imaging and natural language processing), environmental conservation (e.g., climate modeling), and various sectors requiring data-driven insights. To ensure equal access to AI technology worldwide, the focus should be on education and capacity building. This includes introducing AI curricula in educational institutions, providing affordable or free AI education through online platforms, and conducting AI training sessions in regions with limited access to AI education.

Our hope for Japan's role in the Alliance is to leverage its unique strengths in technology and ethics to foster a harmonious integration of AI into society. As a leader in both technological innovation and ethical considerations, Japan is well-positioned to contribute valuable insights into the development of AI governance frameworks that balance innovation with human-centric values.

Japan's proactive role in international AI collaborations, such as its contributions to the OECD AI Policy Observatory*2 and its role in the G7 and G20 discussions on AI, exemplifies the significance of cross-border partnerships in establishing a cohesive global AI governance framework. We anticipate that Japan's active participation will not only enrich the Alliance's regional and cultural diversity but also serve as a model for the responsible and ethical application of AI globally.

In conclusion, as AI continues to transform industries and societies, operationalizing effective AI governance principles is paramount. Achieving this balance between innovation and responsibility requires collaborative efforts, adaptive regulation, and a commitment to ensuring the benefits of AI are accessible to all. The Alliance stands as a testament to the commitment of various stakeholders in navigating this complex terrain.

Cathy Li

Head, AI, Data and Metaverse; Deputy Head, C4IR

World Economic Forum

Cathy Li has been Head of AI, Data and Metaverse at the World Economic Forum since 2018. Previously, she was a Partner at GroupM, WPP's media investment arm, and held various leadership positions across WPP's business portfolio, including digital media buying, data and analytics, content, strategic consulting, and financial communications, in London, New York, and Beijing. She currently spearheads the AI Governance Alliance at the WEF, a multistakeholder initiative that unites industry leaders, governments, academic institutions, and civil society organisations to champion the responsible global design and release of transparent and inclusive AI systems.

Future Prospects through Generative AI

Hitachi Research Institute welcomes questions, consultations, and inquiries related to articles published in the "Hitachi Souken" Journal through our contact form.